Censoring Midjourney so as not to anger Chinese users

The use of AI to create realistic images is on the rise, with platforms such as Midjourney letting users generate deepfake images of world leaders, celebrities, and even themselves. However, the technology has sparked ethical and legal debates, especially over political satire.

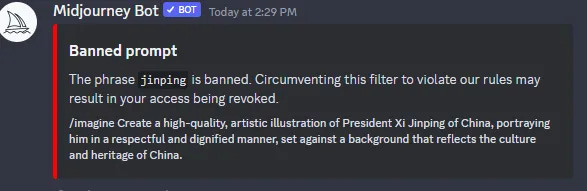

Midjourney, a generative AI platform based in San Francisco, recently sparked controversy by blocking images of Chinese President Xi Jinping. The move was aimed at curbing the spread of deep fakes online, but critics argue that it constitutes a form of censorship, undermining the fundamental principles of free speech and expression.

Sarah McLaughlin, a Senior Scholar at the Foundation for Individual Rights and Expression (FIRE), believes that international censorship should not be dismissed as irrelevant to Americans, as foreign governments or markets can affect how American companies operate. She points to the 2014 Sony Pictures hack, which revealed that a scene of the Great Wall of China being destroyed was removed from the production copy of the Adam Sandler film “Pixels” because of concerns that it would hurt the movie’s box office success in China.

There is a long history of creating satirical images of world leaders, especially in the United States, where freedom of speech and expression is enshrined in the U.S. Constitution. However, this is not the case in many other countries, including China, where political satire could cause problems and endanger users. McLaughlin suggests that the tools we use may end up following other countries’ laws instead of our own, in ways we didn’t anticipate.

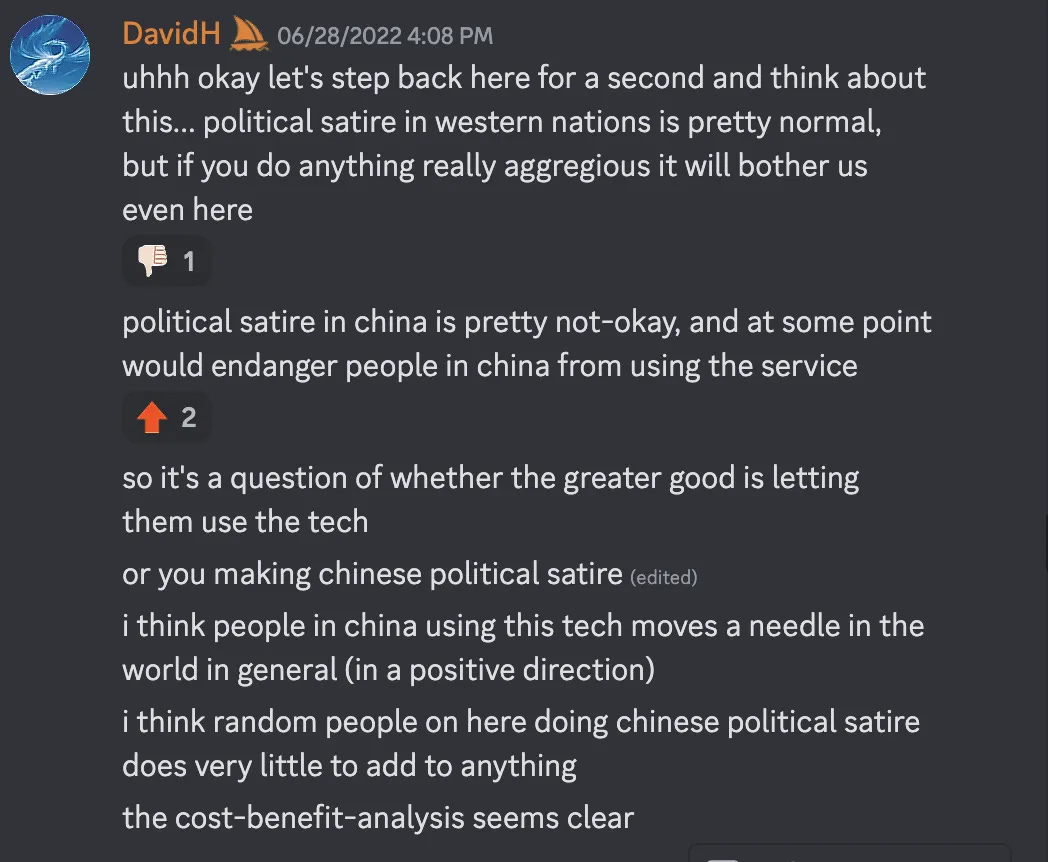

Midjourney CEO David Holz has acknowledged that political satire that’s typical in the West could cause problems in China and could even endanger users. In a post on the Midjourney Discord server, he suggested that the greater good is allowing Chinese people to use the technology rather than encouraging political satire. He stated, “I think people in China using this tech moves [the] needle in the world in general in a positive [direction]. Random people on here doing Chinese political satire does very little to add to anything—the cost-benefit analysis seems clear.”

The restriction on images of President Xi Jinping may have been implemented to prevent the spread of disinformation and the potential abuse of AI technology. However, McLaughlin does not see Midjourney’s time in China lasting very long, as China tends to exclude Western companies.

She also believes that what Midjourney is doing is outside the norm, citing a recent decision by Twitter to censor content in India. “Twitter faced censorship issues in India recently, with government demands to censor a BBC documentary about Prime Minister Modi. Twitter blocked the content only in India, not globally. Midjourney, on the other hand, is making it so everyone can’t use Xi Jinping’s name, which is an escalation from just blocking Chinese users.”

Realistic AI-generated images can shape how many people see the world, especially when it comes to political satire. For instance, deepfakes of world leaders could cause diplomatic tension and even lead to conflict between countries. They could also confuse citizens who are unable to distinguish between real and fake images.

Furthermore, the technology could be used to manipulate public opinion and influence political outcomes. For instance, political parties or interest groups could use deep fakes to smear their opponents or spread false information to sway voters. This could undermine the integrity of democratic processes and lead to the erosion of trust in public institutions.

The dangers of deepfakes are not limited to political satire. The technology could also be used to create fake news, scam people, and commit identity theft. For instance, cybercriminals could use deepfakes to impersonate people and gain access to sensitive information.

The use of AI to create realistic political satire images raises several legal and moral questions. While it can be a tool for artistic expression and political commentary, it can also be used to spread misinformation, hate speech, and propaganda. Moreover, AI-generated images blur the lines between reality and fiction, raising concerns about their impact on people’s perceptions of reality.

The Midjourney case provides an interesting example of the challenges of using AI art creators to create political satire images. The platform’s decision to block images of Chinese President Xi Jinping highlights the complex relationship between free speech, censorship, and international law. Critics argue that the ban constitutes a form of censorship, undermining the fundamental principles of free speech and expression. They also fear that it sets a dangerous precedent for other AI art creators, who may follow Midjourney’s lead in censoring content to avoid legal and political repercussions.

On the other hand, supporters of these restrictions argue that they are necessary to prevent the spread of disinformation and the potential abuse of AI technology. They also argue that cultural and political differences between countries make it impossible to apply a one-size-fits-all approach to free speech and expression. What may be acceptable in one country may be considered offensive or even illegal in another. Therefore, AI art creators may have to adapt to different legal and cultural contexts to avoid running afoul of local laws and customs.

It is important to note that the use of AI to create realistic images is not limited to political satire. It also has implications for other fields such as advertising, entertainment, and journalism. For example, AI-generated deepfakes can be used to create fake news, impersonate public figures, or manipulate public opinion. They can also be used to create more realistic and personalized advertising campaigns or to enhance the quality of movies and video games. While these applications can have legitimate uses, they also pose significant risks to privacy, security, and social stability.

One of the most significant dangers of using AI to create realistic images is the potential for misuse by malicious actors. For example, deepfakes can be used to defame, embarrass, or blackmail individuals by creating fake images or videos of them engaging in illicit or embarrassing behavior. They can also be used to impersonate public figures or create false evidence to support a particular agenda. This could lead to serious legal and ethical implications, as well as damage to reputation, trust, and social cohesion.

Moreover, AI-generated images can also have a significant impact on people’s perceptions of reality. As AI art creators become more sophisticated, they can create images that are increasingly difficult to distinguish from reality. This could lead to confusion, distrust, and anxiety among people who are unable to tell what is real and what is fake. It could also lead to the spread of conspiracy theories and fake news, as people become more susceptible to manipulation and propaganda.

The use of AI to create realistic political satire images raises important legal, moral, and social questions. While it can be a powerful tool for artistic expression and political commentary, it also poses significant risks to privacy, security, and social stability. Moreover, it highlights the complex relationship between free speech, censorship, and international law, as well as the challenges of adapting AI art creators to different legal and cultural contexts. As AI technology continues to advance, it is essential to develop robust ethical and legal frameworks to guide its use and ensure that it serves the common good rather than undermining it.
